Bashar's Blog

Talks around Computer Science and Stuff

AWS CloudHSM workshop

In: AWS

21 Apr 2021

AWS CloudHSM is a cloud-based hardware security module (HSM) that lets you easily generate and use your own encryption keys. AWS manages the HSM appliance but does not have access to your keys; customers control and manage their own keys. A cluster of CloudHSM appliances can be provisioned within a VPC, and requests are automatically load balanced across the different HSMs. There are no upfront costs to use AWS CloudHSM; billing is per hour.

If you are interested in getting some hands-on experience with AWS CloudHSM and how it works, I have created a workshop that will guide you through that journey. You can try it here:

https://github.com/alfallouji/CLOUDHSM-WORKSHOP

You will go through the steps to create, deploy, and configure your own CloudHSM cluster, and learn how to perform encryption operations.

Have fun!

- Tags: AWS, CloudHSM

Optimizing definition of inbound rules for security groups in AWS

In: AWS

11 Apr 2021

Introduction

In a perfect world, we should always protect the network by avoiding unnecessary open network ports. Protecting a resource (such as an EC2 instance) in AWS can be done by using a security group, which acts as a virtual firewall to control incoming and outgoing traffic.

However, there are cases where defining the inbound rules of a security group can be challenging and a compromise is needed. Some applications may use thousands of ports, and the Ops team may have limited documentation on which ports are actually needed and should be accessible. We also have to consider certain limits regarding security groups (e.g. a default limit of 60 inbound rules per security group).

Therefore, there is a need to better understand the application requirements and identify which ports are being used by a given workload. This is where we can leverage VPC Flow Logs, a feature that captures information about the IP traffic going to and from network interfaces in a VPC. Flow log data can be published to Amazon CloudWatch Logs or to Amazon S3. You can create a flow log for a VPC, a subnet, or a network interface. Here is an example of a flow log record:

2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK

In this example, we can read that SSH traffic (destination port 22, TCP protocol) to network interface eni-1235b8ca123456789 in account 123456789010 was allowed.
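To make the field positions explicit, here is a minimal Python sketch that parses a record like the one above. It assumes the default (version 2) flow log format with its 14 space-separated fields; the class and function names are hypothetical, and records with missing data (where fields are reported as `-`) are not handled.

```python
from typing import NamedTuple


class FlowLogRecord(NamedTuple):
    """Fields of a default-format (version 2) VPC flow log record, in order."""
    version: int
    account_id: str
    interface_id: str
    srcaddr: str
    dstaddr: str
    srcport: int
    dstport: int
    protocol: int      # IANA protocol number, e.g. 6 for TCP
    packets: int
    byte_count: int
    start: int         # Unix timestamps delimiting the capture window
    end: int
    action: str        # ACCEPT or REJECT
    log_status: str    # OK, NODATA or SKIPDATA


# Names of the fields that hold integers in the default format.
_INT_FIELDS = {"version", "srcport", "dstport", "protocol",
               "packets", "byte_count", "start", "end"}


def parse_flow_log_record(line: str) -> FlowLogRecord:
    """Parse one space-separated record (hypothetical helper, not part of the tool)."""
    parts = line.split()
    if len(parts) != len(FlowLogRecord._fields):
        raise ValueError(f"expected 14 fields, got {len(parts)}")
    values = [int(value) if name in _INT_FIELDS else value
              for name, value in zip(FlowLogRecord._fields, parts)]
    return FlowLogRecord(*values)


record = parse_flow_log_record(
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK"
)
print(record.interface_id, record.dstport, record.protocol, record.action)
```

Collecting the port column for one `interface_id` over many such records gives the kind of port list that an analysis like the one described in this post starts from.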

VPC Flow Logs Analyzer is a little experiment that I worked on (an open source tool written in Python). It analyzes VPC Flow Logs and suggests a set of optimized port ranges and individual ports that cover all the source ports used for a specific ENI (network interface). This result can serve as a base to create the inbound rules of your security groups.

I experimented with two different implementations. The first leverages Amazon Athena, an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. The second uses Amazon CloudWatch Logs, which can centralize logs from different systems and AWS services and can be queried using CloudWatch Logs Insights.

Let’s see it in action

Let’s assume that the VPC Flow Logs returned the following source ports used by a specific ENI:

80

81

82

85

86

1001

1002

1020

Running the tool with maxInboundRules of 3 and maxOpenPorts of 3 (maximum number of open ports left within a single range or inbound rule) gives the following result :

ranges :

- from 80 to 86

- from 1001 to 1002

individual ports :

- 1020

extra/unused ports (ports that are open and shouldnt) :

- 83, 84

In the previous example, we end up with two ranges and one individual port. There are 2 ports that would be open and shouldn’t (ports 83 and 84). This is a compromise that we may decide to accept if we want to only have a maximum of 3 inbound rules defined in the security group.

If we decide to run the optimizer with maxInboundRules = 10 and maxOpenPorts of 3, we get the following result :

ranges :

- from 80 to 82

- from 85 to 86

- from 1001 to 1002

individual ports :

- 1020

extra/unused ports (ports that are open and shouldnt) :

- (none)

In this case, the optimizer is suggesting three ranges and one individual port. This means a total of four inbound rules (which respects our constraints of being below 10 rules). There is no extra port left open.

If we decide to run the optimizer with maxInboundRules = 1 and maxOpenPorts of 3, we get the following result :

Couldnt find a combination - you may want to consider increasing

the values for maxInboundRules and/or maxOpenPorts

The optimizer has no magic power (at least not yet) and is simply unable to come up with a solution under those constraints.

If we decide to run the optimizer with maxInboundRules = 1 and maxOpenPorts of 1000, we get the following result :

ranges :

- from 80 to 1020

individual ports :

- (none)

extra/unused ports (ports that are open and shouldnt) :

- 83, 84, 87, 88, 89, 90, ..., ..., 999, 1000, ..., ..., 1018, 1019

In this example, the optimizer is giving one single range (as requested). However, there will be 933 ports left open that shouldn’t (which is below the value of maxOpenPorts of 1000).
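One way to reason about the four runs above is a greedy merge: start from the runs of consecutive ports, then repeatedly merge the two neighbouring ranges that would leave the fewest unused ports open, until the rule count fits. The sketch below is an assumption about how such an optimizer could work, not the actual algorithm of the vpc-flowlogs-analyzer (see the repository for that); the function names are hypothetical, and the parameter names simply mirror maxInboundRules and maxOpenPorts.

```python
from typing import Iterable, List, Optional, Tuple


def suggest_rules(ports: Iterable[int], max_inbound_rules: int,
                  max_open_ports: int) -> Optional[List[Tuple[int, int]]]:
    """Return (first, last) port ranges, or None when no combination fits.

    A range with first == last is an individual port.
    """
    used = sorted(set(ports))
    # Each entry is (first_port, last_port, number_of_used_ports_inside).
    runs: List[Tuple[int, int, int]] = []
    for port in used:
        if runs and port == runs[-1][1] + 1:
            first, _, count = runs[-1]
            runs[-1] = (first, port, count + 1)
        else:
            runs.append((port, port, 1))

    def unused(rng: Tuple[int, int, int]) -> int:
        # Ports covered by the range that were never observed in the logs.
        return (rng[1] - rng[0] + 1) - rng[2]

    while len(runs) > max_inbound_rules:
        best_index, best_merged = None, None
        for i in range(len(runs) - 1):
            merged = (runs[i][0], runs[i + 1][1], runs[i][2] + runs[i + 1][2])
            if best_merged is None or unused(merged) < unused(best_merged):
                best_index, best_merged = i, merged
        # Give up when even the cheapest merge opens too many unused ports.
        if best_merged is None or unused(best_merged) > max_open_ports:
            return None
        runs[best_index:best_index + 2] = [best_merged]
    return [(first, last) for first, last, _ in runs]


def extra_ports(rules: List[Tuple[int, int]], ports: Iterable[int]) -> List[int]:
    """Ports opened by the rules that were not in the observed port list."""
    used = set(ports)
    return [p for first, last in rules for p in range(first, last + 1) if p not in used]


observed = [80, 81, 82, 85, 86, 1001, 1002, 1020]
print(suggest_rules(observed, 3, 3))   # [(80, 86), (1001, 1002), (1020, 1020)]
print(suggest_rules(observed, 1, 3))   # None
```

Being greedy, this sketch can miss a valid combination that a more exhaustive search would find, so it should be read as an illustration of the trade-off between rule count and unused open ports rather than as a reference implementation.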

What’s next?

We saw different ways to leverage and query VPC Flow Logs, using Amazon Athena and Amazon CloudWatch Logs Insights. It becomes quite easy to identify traffic usage and build tools such as the vpc-flowlogs-analyzer to better understand the network requirements of a specific workload.

From a cost perspective, you may want to consider the following to keep your costs low:

- Only target specific ENIs for the VPC Flow Logs;

- Don’t retain the data indefinitely; you can change the retention period of your CloudWatch Logs or set up a lifecycle configuration rule in S3;

- Amazon Athena and CloudWatch Logs Insights pricing is based on the queries that you run (the amount of data being processed).

I believe there is value in running such an analysis to improve the security posture of a workload running on AWS. I am already thinking of some enhancements, such as:

- Analyzing VPC flow logs along with existing security group inbound rules and coming up with recommendations (e.g. there is no traffic on port X, however, security_group_1 has port X opened).

- Analyzing existing security groups and providing recommendations to merge some of them or reduce their number (less code is always better).

Links

Source code and documentation can be found here : https://github.com/alfallouji/AWS-SAMPLES/tree/master/vpc-flowlogs-analyzer

- Tags: AWS, Security

Generating set of data

In: AWS

15 Nov 2017

I just released a little project on GitHub that provides an easy way to generate sample data and push it to something like an Amazon Kinesis stream. This is quite handy if, for example, you need to build a POC or a demo and require a data set.

Here is the link to the GitHub project: https://github.com/alfallouji/ALFAL-AWSBOOTCAMP-DATAGEN

Or to make it easier, you can click here to deploy it on AWS.

Please keep in mind that this code is provided free of charge. If you decide to deploy it on AWS (using the CloudFormation script), you may incur charges related to the resources you are using in AWS (e.g. EC2, S3, Kinesis, etc.).

The structure of the generated data can be defined within a configuration file.

The following features are supported :

- Random integer (within a min-max range)

- Random element from a list

- Random element from a weighted list (e.g. ‘elem1’ => 20% of chance, ‘elem2’ => 40% of chance, etc.)

- Constant

- Timestamp / Date

- Counter (increment & decrement)

- Mathematical expression using previously defined fields, e.g. ({field1} + {field2} / 4) * {field3}

- Conditional rules, e.g. {field3} equals TRUE if {field1} + {field2} < 1000; {field4} equals FALSE if {field1} + {field2} >= 1000

- Any of the features exposed by the fzaninotto/faker library

- Ability to define the overall distribution (e.g. I want 20% of my population to have a value of ‘Y’ for {field3}). The generator will run until it meets the desired distribution.
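To illustrate two of the features above (the weighted list and the overall distribution), here is a small Python sketch. It is written in Python purely for illustration and is not the project's code; the function names are hypothetical, and the "run until the distribution is met" behaviour is modelled here, as an assumption, by discarding records once the quota for their value is full.

```python
import random
from typing import Callable, Dict, List


def pick_weighted(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick one key, with probability proportional to its weight."""
    values = list(weights)
    return rng.choices(values, weights=[weights[v] for v in values], k=1)[0]


def generate_with_distribution(make_record: Callable[[random.Random], dict],
                               field: str, ratios: Dict[str, int], total: int,
                               rng: random.Random,
                               max_attempts: int = 100_000) -> List[dict]:
    """Keep generating records until `field` matches the requested ratios."""
    ratio_sum = sum(ratios.values())
    # Number of records wanted for each value of `field`.
    quotas = {value: total * share // ratio_sum for value, share in ratios.items()}
    counts = {value: 0 for value in ratios}
    target = sum(quotas.values())
    kept: List[dict] = []
    attempts = 0
    while len(kept) < target:
        if attempts >= max_attempts:
            raise RuntimeError("could not reach the requested distribution")
        attempts += 1
        record = make_record(rng)
        value = record[field]
        # Keep the record only if its value still has room in the quota.
        if counts.get(value, 0) < quotas.get(value, 0):
            counts[value] += 1
            kept.append(record)
    return kept


def make_record(rng: random.Random) -> dict:
    """Example record: a random integer, a constant, a weighted pick and a rule."""
    field1 = rng.randint(10, 100)
    field2 = 1000
    return {
        "field1": field1,
        "field2": field2,
        "field4": pick_weighted({"men": 40, "women": 60}, rng),
        "result": "Y" if field1 + field2 > 1060 else "N",
    }


records = generate_with_distribution(make_record, "result", {"Y": 3, "N": 7},
                                     total=10, rng=random.Random(42))
print(sum(r["result"] == "Y" for r in records), "records out of", len(records), "have result Y")
```

The `max_attempts` guard is there because a requested value that the rules can never produce would otherwise make the loop run forever.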

Here is an example of a configuration file :

    // Define the desired distribution (optional)
    'distribution' => array(
        // We want to have 30% of our distribution with a value of 'Y'
        // for the result field and 70% with a value of 'N'
        'result' => array(
            'Y' => 3,
            'N' => 7,
        ),
    ),

    // Define the desired fields (mandatory)
    'fields' => array(
        // You can use the date function and provide the desired format
        'time' => array(
            'type' => 'date',
            'format' => 'Y-m-d H:i:s',
        ),

        // Randomly pick an integer number between 10 and 100
        'field1' => array(
            'type' => 'randomNumber',
            'randomNumber' => array(
                'max' => '100',
                'min' => '10',
            ),
        ),

        // Field2 is a constant equal to 1000 (could be any string)
        'field2' => array(
            'type' => 'constant',
            'constant' => '1000',
        ),

        // Randomly pick an element from a defined list of values
        'field3' => array(
            'type' => 'randomList',
            'randomList' => array(
                'us',
                'europe',
                'asia',
            ),
        ),

        // Pick an element from a weighted list
        'field4' => array(
            'type' => 'weightedList',
            'weightedList' => array(
                'men' => 40,
                'women' => 60,
            ),
        ),

        // You can use a mathematical expression
        'field5' => array(
            'type' => 'mathExpression',
            'mathExpression' => '{field1} + {field2} + sin({field2}) * 10',
        ),

        // You can use any of the faker features
        'field6' => array(
            'type' => 'faker',
            'property' => 'name',
        ),
        'field7' => array(
            'type' => 'faker',
            'property' => 'email',
        ),
        'field8' => array(
            'type' => 'faker',
            'property' => 'ipv4',
        ),

        // You can define conditional rules to be evaluated in order to get the value.
        // If this condition is true:
        // {field1} + {field2} > 1060, then the value for {result} is 'Y'
        'result' => array(
            'type' => 'rules',
            // Value => condition
            'rules' => array(
                'Y' => '{field1} + {field2} > 1060',
                'N' => '{field1} + {field2} <= 1060',
            ),
        ),
    ),

All 5 AWS Certifications done

In: AWS

12 Sep 2017

I just got the AWS DevOps Engineer Professional Certification. That’s all 5 AWS certifications!

AWS Solution Architect Professional Certification

In: AWS

1 Sep 2017

I just passed the AWS Solutions Architect Professional certification.

One more left!

One more – three left

In: AWS

15 Aug 2017

This one was easier to get than the Solutions Architect one.

Just got my AWS Solutions Architect Certification

In: AWS

14 Jul 2017

I am going to switch jobs in a few weeks. As I mentioned, I decided to take the AWS Solutions Architect Certification exam before starting in that new position.

In case you want to do the same, those course notes were a nice summary of what is covered during the exam.

AWS Solution Architect Certification

In: AWS

5 Jun 2017

I started preparing for the AWS Solutions Architect Certification.

One of my colleagues at Origin recently re-passed the exam. He was nice enough to share his course notes on his blog at: http://www.beddysblog.com/marks-aws-solution-architect-course-notes-aprmay-2017/

I just went through them and they are pretty useful. I encourage you to take a look if you are also interested in passing the AWS Solutions Architect certification.

- Tags: AWS, Certification

Creating a search for your application with Solr and PHP

In this first article, we will talk about how to integrate a strong and flexible search engine within your web application. There are various open source search engines available on the market. This article is about Solr. I have been using it for different projects and it offers a solid set of features.

What is Solr ?

Solr is an open source enterprise search server based on the Lucene Java search library, with XML/HTTP and JSON APIs, hit highlighting, faceted search, caching, replication, a web administration interface and many more features. It runs in a Java servlet container such as Tomcat.

Source : http://lucene.apache.org/solr/

What features does it offer ?

Solr is a standalone enterprise search server with a web-services like API. You put documents in it (called “indexing”) via XML over HTTP. You query it via HTTP GET and receive XML results.

Advanced Full-Text Search Capabilities

Optimized for High Volume Web Traffic

Standards Based Open Interfaces – XML, JSON and HTTP

Comprehensive HTML Administration Interfaces

Server statistics exposed over JMX for monitoring

Scalability – Efficient Replication to other Solr Search Servers

Flexible and Adaptable with XML configuration

Extensible Plugin Architecture

Source: http://lucene.apache.org/solr/features.html

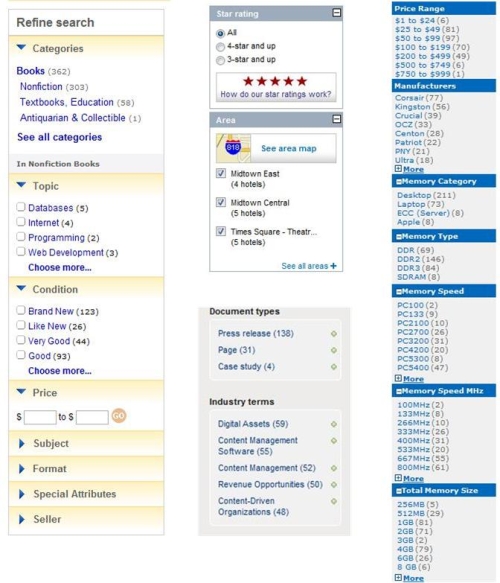

One of the “sexy features” offered by Solr is faceted search. If you are not familiar with that notion, facets are the filters you often see on e-commerce sites.

Faceted navigations are used to enable users to rapidly filter results from a product search based on different ways of classifying the product by their attributes or features.

For example: by brand, by sub-product category, by price bands

Source : http://www.davechaffey.com/E-marketing-Glossary/Faceted-navigation.htm

It helps a lot when users are searching. Here are some examples of facets displayed on various websites.

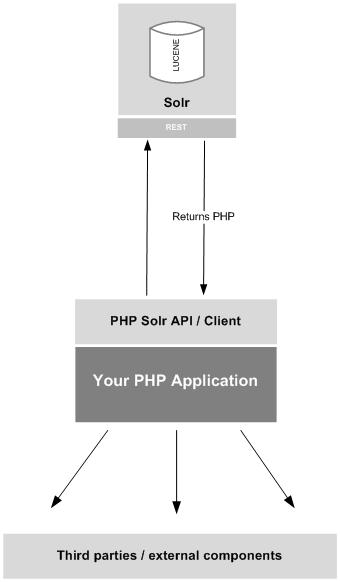

How to use Solr with a PHP application ?

Solr has a full REST interface, making it very easy to talk to.

It can output responses in different formats (XML, JSON, etc.); it can even output responses in PHP or PHPS.

- PHPS = Serialized PHP

- PHP = PHP code

We will discuss later how you can enable and use this feature.
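As an illustration of the HTTP interface described above, the following sketch builds the URL of a search request, including the `wt` parameter that selects the response format and the standard `facet` / `facet.field` parameters. It is written in Python for brevity rather than PHP; the function name is hypothetical, and the host, port, core name and field names are assumptions borrowed from the setup used later in this article.

```python
from typing import Iterable
from urllib.parse import urlencode


def build_select_url(base_url: str, core: str, query: str,
                     response_format: str = "json",
                     facet_fields: Iterable[str] = ()) -> str:
    """Build a Solr select URL; nothing is sent over the network here."""
    params = [("q", query), ("wt", response_format)]
    facet_fields = list(facet_fields)
    if facet_fields:
        # Faceting is switched on once, then one facet.field per field.
        params.append(("facet", "true"))
        params.extend(("facet.field", name) for name in facet_fields)
    return f"{base_url}/{core}/select?{urlencode(params)}"


url = build_select_url("http://localhost:8983/solr", "tutorial",
                       "title:solr", response_format="phps",
                       facet_fields=["category", "person"])
print(url)
```

Requesting `wt=phps` only works once the corresponding response writer is enabled in the Solr configuration; a PHP client can then pass the response body to `unserialize()`.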

Some open source libraries are available in PHP. There is also a PHP extension for Solr.

- PHPSolrClient

- SimpleSolr

- Solr PHP extension

In this article, we will use a custom-made client (named SimpleSolr). The SimpleSolr class is available for download but, as said earlier, there are many existing frameworks or libraries that can be used for that purpose. I personally decided to build my own little class in order to learn more about the Solr API.

We will assume in this tutorial that you have a functional Apache / PHP 5.2+ installation ready and that you are running on a Unix platform. The first thing you need to do is install Solr.

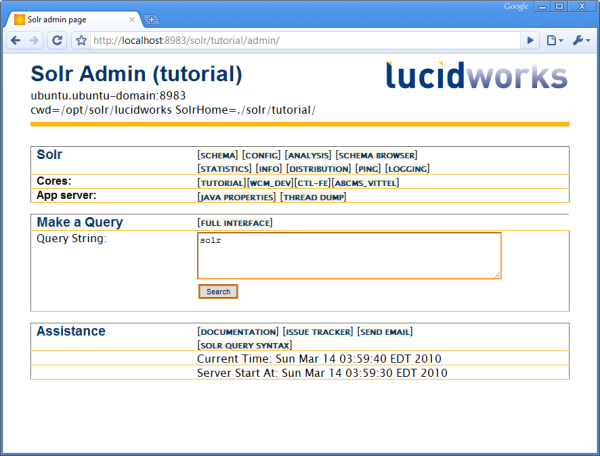

Installing Lucidworks Solr

We recommend using the LucidWorks package for Solr. Based on the latest stable release of Apache Solr, it includes major new features and enhancements. For further details, you can check their website (www.lucidimagination.com). Here is the URL to download it: http://www.lucidimagination.com/Downloads/LucidWorks-for-Solr

Installing Solr is very easy: LucidWorks offers an installer that runs on both Windows and Linux, since it is a .jar.

You will need to install a JRE. For all the details on how to install LucidWorks, please refer to this documentation: http://www.lucidimagination.com/search/document/CDRG_ch02_2.1

Whenever you are ready, go to the folder where Solr is installed. To see all the options, you can type this:

    sh lucidworks.sh --help
    Using CATALINA_BASE:   /var/www/solr/lucidworks/tomcat
    Using CATALINA_HOME:   /var/www/solr/lucidworks/tomcat
    Using CATALINA_TMPDIR: /var/www/solr/lucidworks/tomcat/temp
    Using JRE_HOME:        ./../jre
    Usage: catalina.sh ( commands ... )
    commands:
      debug             Start Catalina in a debugger
      debug -security   Debug Catalina with a security manager
      jpda start        Start Catalina under JPDA debugger
      run               Start Catalina in the current window
      run -security     Start in the current window with security manager
      start             Start Catalina in a separate window
      start -security   Start in a separate window with security manager
      stop              Stop Catalina
      stop -force       Stop Catalina (followed by kill -KILL)
      version           What version of tomcat are you running?

In order to start Solr, you need to type the following :

    sh lucidworks.sh start
    Using CATALINA_BASE:   /var/www/solr/lucidworks/tomcat
    Using CATALINA_HOME:   /var/www/solr/lucidworks/tomcat
    Using CATALINA_TMPDIR: /var/www/solr/lucidworks/tomcat/temp
    Using JRE_HOME:        ./../jre

Now you can check that Solr is running by going here: http://localhost:8983/solr/admin

You should see the Solr admin page.

Now that you have an install ready to be used, let’s build a simple search.

Configuring schema.xml and solrconfig.xml

You can configure Solr to use various cores. That way, the same Solr instance can serve various applications. In order to do that, you can check the following link : http://wiki.apache.org/solr/CoreAdmin

You need to edit solr.xml and add the new core:

    <solr persistent="false">
      <cores adminPath="/admin/cores">
        <core name="tutorial" instanceDir="tutorial" />
        <!-- You can add new cores here -->
      </cores>
    </solr>

Then, edit the solrconfig.xml file and make sure the right path is set for the dataDir property.

    <!-- Used to specify an alternate directory to hold all index data
         other than the default ./data under the Solr home.
         If replication is in use, this should match the replication configuration. -->
    <dataDir>${solr.solr.home}/tutorial/data</dataDir>

Also make sure that the PHP and PHPS response writers are enabled! Otherwise, it won’t work!

    <queryResponseWriter name="php" class="org.apache.solr.request.PHPResponseWriter"/>
    <queryResponseWriter name="phps" class="org.apache.solr.request.PHPSerializedResponseWriter"/>

Now, edit the conf/schema.xml file and add the following fields within the <fields> node:

    <!-- Unique Solr document ID (see <uniqueKey>) -->
    <field name="solrDocumentId" type="string" indexed="true" stored="true" required="true" />

    <!-- Fields for searching -->
    <field name="id" type="integer" indexed="true" stored="false" />
    <field name="text" type="text" indexed="true" stored="false" multiValued="true" />
    <field name="title" type="text" indexed="true" stored="false" />
    <field name="author" type="text" indexed="true" stored="false" />

    <!-- Facet fields for searching -->
    <field name="category" type="text_facet" indexed="true" stored="false" multiValued="true" />
    <field name="concept" type="text_facet" indexed="true" stored="false" multiValued="true" />
    <field name="location" type="text_facet" indexed="true" stored="false" multiValued="true" />
    <field name="person" type="text_facet" indexed="true" stored="false" multiValued="true" />
    <field name="company" type="text_facet" indexed="true" stored="false" multiValued="true" />

In this article, we will index and search articles. An article has a title, text, an author and some special metadata such as category, concept, location, person and company.
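To give an idea of what indexing such an article looks like on the wire, the sketch below builds the XML update message (`<add><doc>...</doc></add>`) that Solr accepts, typically POSTed to the core's update handler. It is in Python for brevity and only builds the message; the function name and the sample values are hypothetical, while the field names follow the schema in this article.

```python
import xml.etree.ElementTree as ET
from typing import Mapping


def build_add_message(document: Mapping[str, object]) -> str:
    """Serialize one document as a Solr XML <add> message."""
    add = ET.Element("add")
    doc = ET.SubElement(add, "doc")
    for name, value in document.items():
        # A multi-valued field is sent as several <field> elements with the same name.
        values = value if isinstance(value, (list, tuple)) else [value]
        for item in values:
            field = ET.SubElement(doc, "field", {"name": name})
            field.text = str(item)
    return ET.tostring(add, encoding="unicode")


message = build_add_message({
    "solrDocumentId": "article-1",       # sample values, for illustration only
    "id": 1,
    "title": "Hello Solr",
    "author": "Jane Doe",
    "category": ["search", "php"],
})
print(message)
```

Using an XML library rather than string concatenation means characters such as `&` or `<` in a title are escaped correctly. After posting documents, a `<commit/>` message is needed before they become visible to searches.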

Make sure to restart Solr every time you make a change to your solrconfig or schema files!

Now it is the time to start with the PHP code.

A simple search controller

References

IBM article on Solr

http://www.ibm.com/developerworks/opensource/library/os-php-apachesolr/

Refer to http://wiki.apache.org/solr/SolPHP?highlight=((CategoryQueryResponseWriter)) for more information.

Who am I?

My name is Bashar Al-Fallouji. I work as an Enterprise Solutions Architect at Amazon Web Services.

I am particularly interested in Cloud Computing, Web applications, Open Source Development, Software Engineering, Information Architecture, Unit Testing, XP/Agile development.

On this blog, you will find mostly technical articles and thoughts around PHP, OOP, OOD, Unit Testing, etc. I am also sharing a few open source tools and scripts.
